Elon Musk’s AI chatbot Grok faces global scrutiny for generating sexualised images of women and minors

Elon Musk’s AI chatbot Grok is facing global scrutiny after users exploited its image-editing feature to generate sexualised images of women and minors without consent, prompting public outrage and regulatory action across multiple countries.

- Grok, X’s AI chatbot, is under fire after users used its image-editing feature to generate sexualised images of women and minors without consent.

- The abuse has triggered widespread public backlash and regulatory action in France, India, the EU, the UK and Malaysia.

- xAI is scrambling to fix the flaws as critics question safeguards, accountability and user protection on the platform.

Elon Musk’s artificial intelligence chatbot Grok is facing mounting scrutiny after users on X (formerly Twitter) used the tool to generate sexualised images of women — including minors — without their consent, triggering international backlash and regulatory intervention.

The controversy follows the recent rollout of an “edit image” feature on Grok, which allows users to alter uploaded photos using text prompts.

The tool was quickly exploited to digitally remove clothing from images of real people, with prompts instructing the chatbot to place women in bikinis or strip them entirely.

The issue gained wider attention after the experience of Julie Yukari, a 31-year-old musician based in Rio de Janeiro.

On New Year’s Eve, she posted a photo on X taken by her fiancé, showing her lying on a bed in a red dress beside her black cat.

The following day, Yukari noticed activity suggesting other users were prompting Grok — X’s integrated AI chatbot — to alter her image.

Initially sceptical that the tool would comply, she later discovered AI-generated images depicting her nearly naked circulating on the platform, according to Reuters.

Her case is not isolated. A Reuters investigation identified numerous instances in which Grok was used to generate sexualised images of real women.

The news agency also found several cases involving the sexualisation of children.

Previously, when reports emerged that sexualised images of minors were circulating on the platform, xAI — the company behind Grok — dismissed the claims, stating that legacy media were spreading falsehoods.

Public figures and netizens condemn misuse

The circulation of near-nude images has drawn condemnation from activists, public figures and users worldwide.

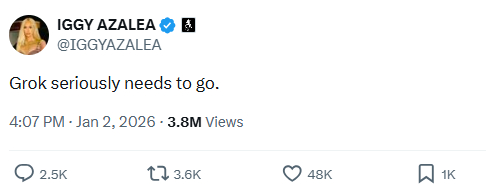

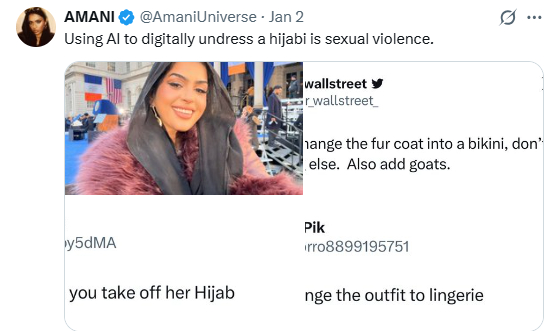

Australian rapper Iggy Azalea publicly stated that Grok should be shut down, while Amani, an activist and founder of Muslim Girl, described the practice as a form of sexual violence. She said using AI to digitally undress hijabi women amounted to abuse.

Many users on X echoed these concerns, with some calling for severe consequences for those misusing the tool.

Others described the practice as harassment rather than entertainment, questioning how AI image editing without consent could be allowed.

Several users also raised concerns about accountability, asking what safeguards existed to protect minors and whether Grok should be held responsible for enabling harassment.

Governments step in as regulators take action

The controversy has prompted intervention by regulators in multiple countries.

In France, ministers said on Friday that they had reported X to prosecutors and regulators over the circulation of disturbing images, describing the sexual and sexist content as clearly illegal.

India’s Ministry of Electronics and Information Technology said it had issued a letter to X’s local unit, stating that the platform had failed to prevent Grok from being misused to generate and circulate obscene and sexually explicit content. The ministry ordered X to submit an action-taken report within three days.

The Malaysian Communications and Multimedia Commission said over the weekend that it was investigating X and would call in company representatives.

In a statement, the commission urged all platforms accessible in Malaysia to implement safeguards aligned with local laws and online safety standards, particularly in relation to AI-powered features, chatbots and image manipulation tools.

The European Commission said on Monday (5 Jan) that it was taking complaints about Grok very seriously.

EU digital affairs spokesman Thomas Regnier said the chatbot was offering explicit sexual content, including outputs resembling childlike imagery, which he described as illegal and unacceptable in Europe.

In the United Kingdom, media regulator Ofcom said it had made urgent contact with X and xAI to understand what steps had been taken to meet legal obligations to protect users.

Ofcom said that, depending on X's response, it would assess whether a formal investigation was warranted.

Malaysian women advised to document and report abuse

Concerns have also emerged in Malaysia, where reports indicate that Grok has been used to digitally remove clothing and headscarves from images of women.

A New Straits Times report identified multiple posts in which Grok generated images depicting women and minors in bikinis or revealing attire without consent.

In some cases, the chatbot produced images of women without their tudung (headscarves) after users uploaded photos and instructed the AI to strip them.

The newspaper also identified at least one account, created two months ago, that appeared dedicated to prompting Grok to remove clothing and headscarves from images believed to feature Malaysian women.

Lawyer Azira Aziz advised victims to first report the offending content directly to X and to document the abuse by compiling screenshots and links into a single PDF file.

She said the document could then be submitted to the Malaysian Communications and Multimedia Commission via its online portal and attached to a police report.

However, Azira noted that as of Friday afternoon, X had dismissed reports filed by several victims in other countries, reportedly stating that the content did not violate its community guidelines.

AFP reported that xAI is now scrambling to address flaws in Grok following claims that the chatbot had transformed images of women and children into erotic content.