Malaysia police warn of new AI “silent call” scam that clones victims’ voices

Malaysian authorities are alerting the public to a rising scam trend where fraudsters use AI to mimic a victim’s voice after obtaining just a few seconds of audio from silent phone calls.

MALAYSIA: Malaysian police are warning the public about a growing wave of “silent call” scams, where fraudsters use artificial intelligence to clone a victim’s voice and deceive their family members into transferring money.

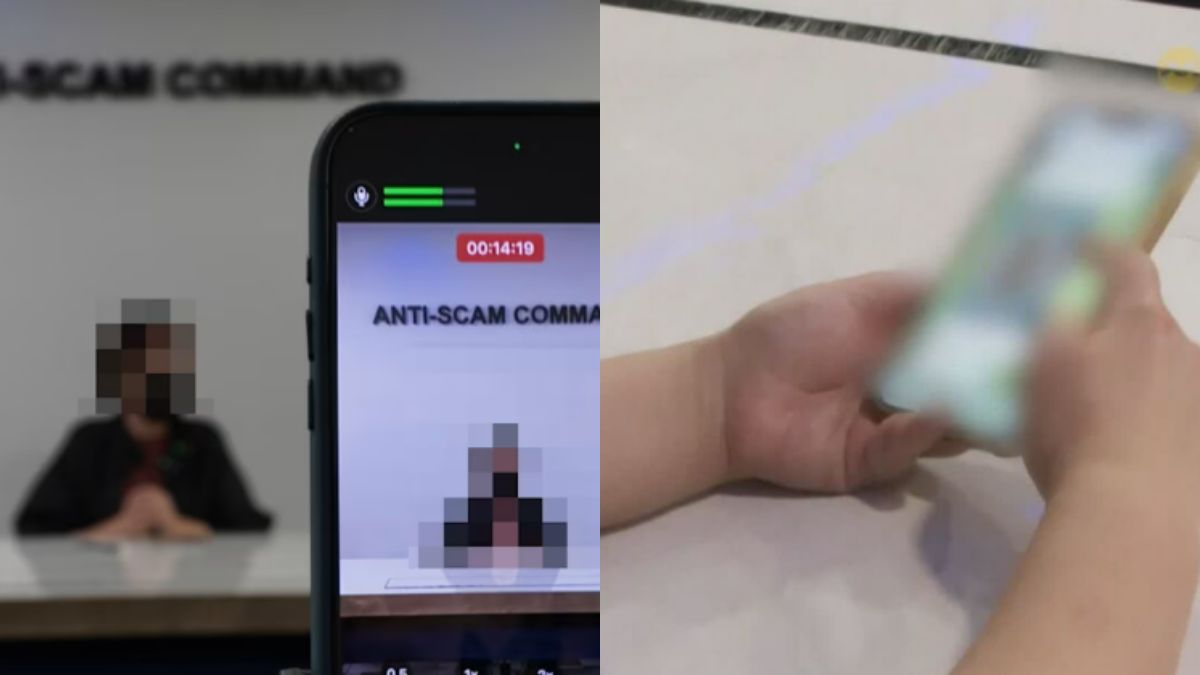

In a viral awareness video shared by Izzul Islam, Cheras Commercial Crime Investigation Department officer Inspector Tai Yong Keong explained that victims often receive calls that appear connected but have no audio.

When the victim responds with repeated greetings such as "hello," scammers capture three to five seconds of their voice, which is enough for AI tools to create a convincing digital clone.

Once scammers have the voice sample, they can generate audio messages or place calls mimicking the victim. They typically target family members, especially parents, pretending the victim is in urgent trouble and needs money immediately.

Inspector Tai said scammers imitate the victim’s tone, speaking style and emotional urgency, causing panicked relatives to transfer funds to third-party accounts.

A New Evolution in Scam Techniques

Speaking on a podcast hosted by KL Crime Prevention and Community Safety deputy head Assistant Commissioner Foo Chek Seng, Tai said the tactic represents a new evolution in commercial crime. AI has made impersonation scams significantly more believable, raising the risk for unsuspecting victims.

To reduce exposure, Tai advised the public not to speak first when answering unknown calls. “If someone truly intends to talk to you, they will introduce themselves first,” he said, urging Malaysians to avoid giving voice samples to unidentified callers.

He added that many Malaysians instinctively greet callers, unknowingly giving scammers usable audio.

Importance of Verification

Police also urged the public to agree on alternative ways to verify emergency requests for money with family members, such as secondary phone numbers, messaging apps, or agreed-upon code phrases.

With scammers increasingly relying on AI voice manipulation, Tai stressed that verification is now more crucial than ever.